Over the past two weeks I significantly sped up two of my Toolforge tools by using Redis, a key-value database. The two tools, checker and shorturls, were slow for different reasons, but now respond instantaneously. Note that I didn't do any proper benchmarking; they're just noticeably faster.

If you're not familiar with it already, Toolforge is a shared hosting platform for the Wikimedia community built entirely using free software. A key component is providing web hosting services so developers can build all sorts of tools to help Wikimedians with really whatever they want to do.

Toolforge provides a Redis server (see the documentation) for tools to use for key-value caching, pub/sub, etc. One important security note is that this service is shared by all Toolforge users, so it's especially important to prefix your keys to avoid collisions. Depending on what exactly you're storing, you may want to use a cryptographically random key prefix; see the security documentation for more details.
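As a rough illustration of the prefixing advice, here is a minimal Python sketch. The helper names (`new_key_prefix`, `make_key`) and the `tool:random:` layout are my own assumptions, not something the Toolforge documentation prescribes.

```python
import secrets


def new_key_prefix(tool_name: str) -> str:
    """Return a prefix like 'checker:<32 hex chars>:' (128 bits of randomness)."""
    # secrets draws from the OS CSPRNG, so other tools cannot guess the prefix.
    return f"{tool_name}:{secrets.token_hex(16)}:"


def make_key(prefix: str, name: str) -> str:
    """Namespace a cache key under this tool's prefix."""
    return prefix + name


# Generate the prefix once, keep it somewhere only your tool can read,
# and reuse the same value on every run so old keys stay reachable.
PREFIX = new_key_prefix("checker")
print(make_key(PREFIX, "wiki-list"))
```

A plain, guessable prefix such as the tool name is enough to avoid accidental collisions. The random part only matters when other users should not be able to find or overwrite your keys.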

Redis on Toolforge is really straightforward to take advantage of for caching, and that's what I want to highlight.

Visit the tool — Source code

checker is a tool that helps Wikisource contributors quickly see the proofread status of pages. The tool was originally written as a Python CGI script and I've since lightly refactored it to use Flask and jinja2 templates.

On each page load, checker would make a database query to get the list of all available wikis, and then an additional query to get information about the selected wiki and an API query to get namespace information. This data is basically static: it only changes when a new wiki is created, which is rare.

<+bd808> I think it would be a lot faster with a tiny bit of redis cache mixed in

I used the Flask-Caching library, which provides convenient decorators to cache the results of Python functions. Using that, adding caching was about 10 lines of code.

To set up the library, you'll need to configure the Cache object to use tools-redis.

    from flask import Flask
    from flask_caching import Cache

    app = Flask(__name__)
    cache = Cache(
        app,
        config={'CACHE_TYPE': 'redis',
                'CACHE_REDIS_HOST': 'tools-redis',
                'CACHE_KEY_PREFIX': 'tool-checker'}
    )

And then use the @cache.memoize() decorator on whatever functions need caching. I set an expiry of a week so that it would pick up any changes in a reasonable time for users.
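With Flask-Caching the expiry is passed as `timeout` in seconds, for example `@cache.memoize(timeout=604800)` for a week. To show roughly what such a decorator does, here is a standard-library sketch with a plain dict standing in for Redis. The `memoize` helper and `get_namespaces` function are hypothetical and are not checker's actual code.

```python
import functools
import time

ONE_WEEK = 7 * 24 * 60 * 60  # expiry in seconds


def memoize(store, timeout, clock=time.time):
    """Cache a function's results in `store` for `timeout` seconds."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args):
            key = (func.__name__, args)
            entry = store.get(key)
            if entry is not None and entry[0] > clock():
                return entry[1]  # fresh hit: skip the slow call
            value = func(*args)
            store[key] = (clock() + timeout, value)
            return value
        return wrapper
    return decorator


store = {}  # stand-in for Redis
calls = []


@memoize(store, timeout=ONE_WEEK)
def get_namespaces(dbname):
    # Hypothetical slow lookup; the real tool queries a database and an API.
    calls.append(dbname)
    return {"dbname": dbname, "namespaces": [0, 104]}


get_namespaces("enwikisource")
get_namespaces("enwikisource")  # served from the cache
print(len(calls))  # counts how many times the slow body actually ran
```

The function arguments are part of the cache key, so each wiki gets its own cached entry.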

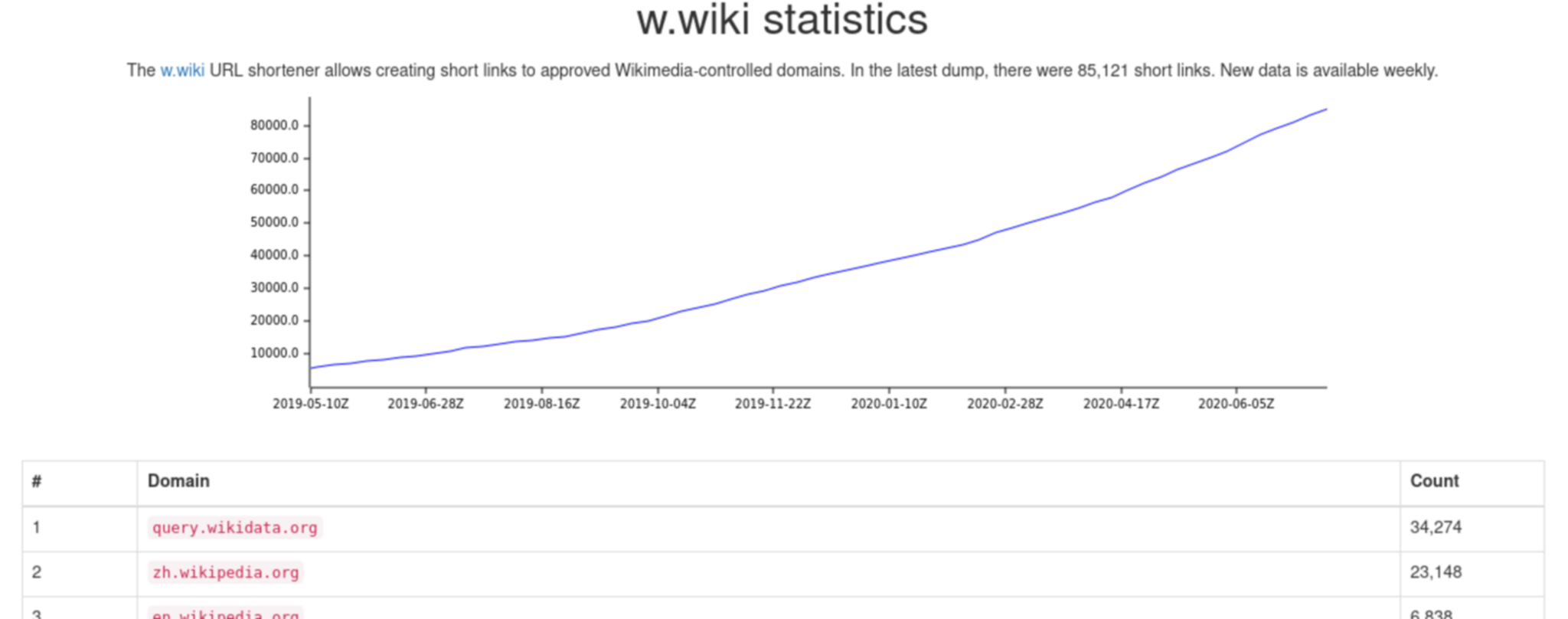

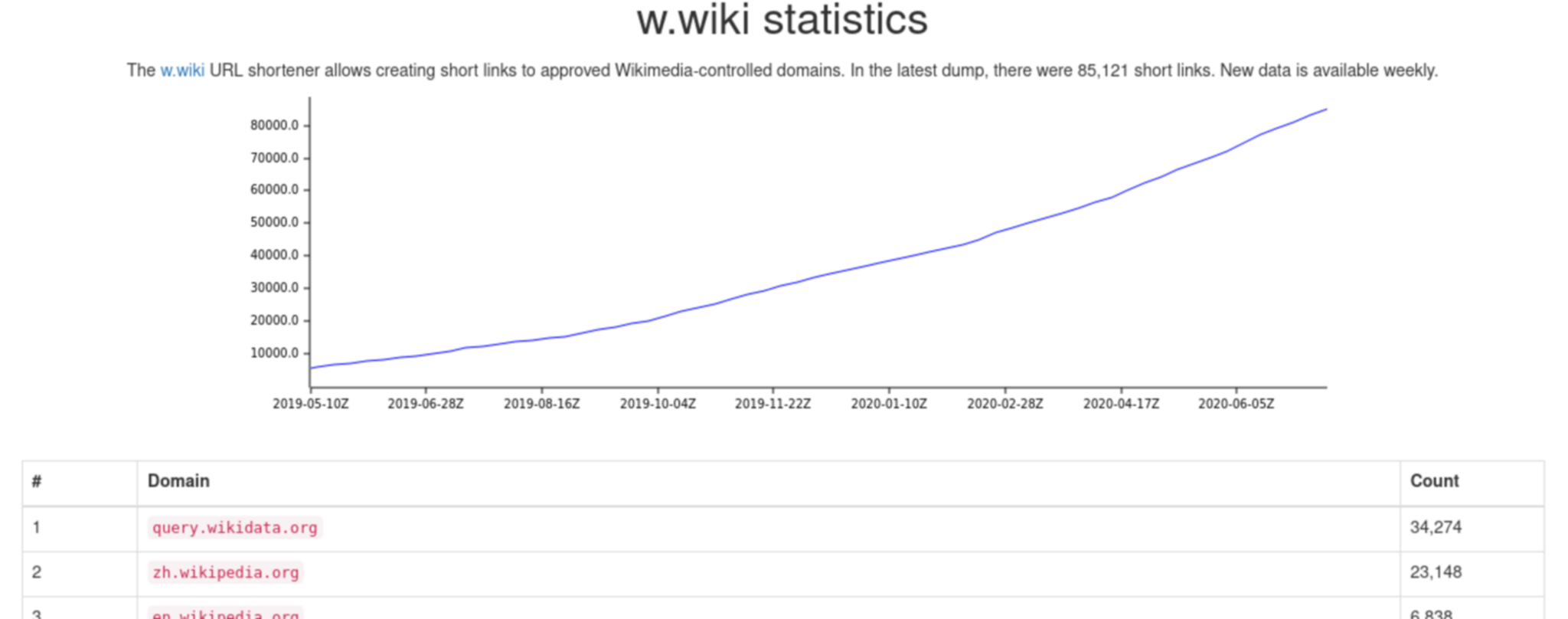

Visit the tool — Source code

shorturls is a tool that displays statistics and historical data for the w.wiki URL shortener. It's written in Rust, primarily using the rocket.rs framework. It parses dumps and generates JSON data files with counts of the total number of shortened URLs overall and by domain.

On each page load, shorturls generates an SVG chart plotting the historical counts from each dump. To generate the chart, it would need to read every single data file, over 60 as of this week. On Toolforge, the filesystem is using NFS, which allows for files to be shared across all the Toolforge servers, but it's sloooow.

<+bd808> but this circles back to "the more you can avoid reading/writing to the NFS $HOME, the better your tool will run"

So to avoid reading 60+ files on each page view, I cached each data file in Redis. There's still one filesystem call to get the list of data files on disk, but so far that seems to be acceptable.

I used the redis-rs crate combined with rocket's connection pooling. The change was about 40 lines of code. It was a bit more involved because redis-rs doesn't have any support for key prefixing or automatic (de)serialization, so I had to manually convert to/from JSON.

The data being cached is immutable, but I still set a 30-day expiry on it. If I ever change the format or cache key, I don't want the old data to sit around forever in the Redis database.
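The tool itself does this in Rust with redis-rs, but the pattern is a simple read-through cache. A minimal Python sketch follows, with assumptions called out: the `tool-shorturls:` prefix and `load_data_file` name are hypothetical, and `FakeRedis` is an in-memory stand-in that mimics only the `get` / `set(..., ex=seconds)` shape of the redis-py client.

```python
import json
from pathlib import Path

THIRTY_DAYS = 30 * 24 * 60 * 60  # expiry in seconds
KEY_PREFIX = "tool-shorturls:"   # hypothetical prefix


class FakeRedis:
    """In-memory stand-in with the get/set(ex=...) shape of redis-py."""

    def __init__(self):
        self.data = {}
        self.ttl = {}

    def get(self, key):
        return self.data.get(key)

    def set(self, key, value, ex=None):
        self.data[key] = value
        self.ttl[key] = ex  # recorded only; this fake never expires keys


def load_data_file(client, path):
    """Return the parsed JSON for `path`, touching the disk only on a miss."""
    key = KEY_PREFIX + Path(path).name       # manual key prefixing
    cached = client.get(key)
    if cached is not None:
        return json.loads(cached)            # manual deserialization
    data = json.loads(Path(path).read_text(encoding="utf-8"))
    client.set(key, json.dumps(data), ex=THIRTY_DAYS)  # manual serialization
    return data
```

Because the files are immutable, there is no invalidation logic. The expiry only exists to clean up keys that nothing reads any more.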

Caching mostly static data in Redis is a great way to make your Toolforge tools faster if you are regularly making SQL queries, API requests or filesystem reads whose results rarely change. If you need help or want tips on how to make other Toolforge tools faster, stop by the #wikimedia-cloud IRC channel or ask on the Cloud mailing list. Thanks to Bryan Davis (bd808) for helping me out.

Originally posted on mastodon.technology.

Roughly 3 years since I started working on it, @kiwix is set to reenter #debian pending ftp-master review! https://ftp-master.debian.org/new/kiwix_2.0.4-1.html

Proper blog post to follow if/once it's accepted.

I skipped writing a post for week 4 and then didn't do any Rust-related things for a week, so this is my week 5 update.

The main (published) Rust I've written since my last post is a port of my w.wiki statistics Toolforge tool. It reads through compressed plaintext dumps, parses URLs and aggregates counts per-domain to make a nice table. I used the flate2 crate for decompressing gzipped files and then the std::io::BufRead trait to read a file line-by-line.
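The tool does this in Rust with flate2 and BufRead, but the same streaming approach can be sketched in Python. The `<code>|<url>` line format and the `count_domains` name are assumptions made for illustration.

```python
import gzip
from collections import Counter
from urllib.parse import urlsplit


def count_domains(dump_path):
    """Count shortened URLs per domain in a gzipped plaintext dump."""
    counts = Counter()
    # "rt" decompresses on the fly and yields one text line at a time,
    # so the whole dump never has to fit in memory.
    with gzip.open(dump_path, "rt", encoding="utf-8") as dump:
        for line in dump:
            # Assumed format: "<code>|<url>"; lines without "|" are skipped.
            _code, sep, url = line.rstrip("\n").partition("|")
            if not sep:
                continue
            try:
                host = urlsplit(url).hostname
            except ValueError:  # malformed URL, e.g. a bad IPv6 literal
                continue
            if host:
                counts[host] += 1
    return counts
```

Calling `count_domains(path).most_common()` then gives the rows for a per-domain table, largest first.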

It also has a slow-to-load chart that shows the increase in total shortened URLs since the start of the service. After looking through a few different plotting libraries, I ended up using plotters because it could properly chart timescale graphs. I think the graphs created by the charts crate look prettier but it wasn't flexible enough for this dataset. The chart is slow to load on Toolforge because it reads ~60 cache files, needing to hit NFS for each one.

I want to move the cache to redis, but the primary Rust redis library doesn't support having an automatic key prefix so I might end up writing a wrapper to do that.

In the future I want to provide charts for the individual domains and maybe a listing of recently shortened links for each domain, we'll see.

Because of how rocket's template system wants its structs to be serde-serializable, it becomes really straightforward to create a JSON API for every template-based endpoint. I had written a whole library (flask-dataapi) for this in Python, and now it's basically built-in.
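The underlying idea is that one serializable context value can feed both the HTML template and a JSON response. A small standard-library Python sketch of that pattern follows. The `DomainStats` type, the `render` function and the template text are all hypothetical, and this is not how rocket or flask-dataapi implement it.

```python
import json
from dataclasses import asdict, dataclass
from string import Template


@dataclass
class DomainStats:  # hypothetical template context
    domain: str
    count: int


PAGE = Template("<p>$domain has $count short URLs</p>")


def render(stats, fmt="html"):
    """Render the same context either as HTML or as JSON."""
    context = asdict(stats)  # single source of truth for both outputs
    if fmt == "json":
        return json.dumps(context)
    return PAGE.substitute(context)


stats = DomainStats("en.wikipedia.org", 3)
print(render(stats))
print(render(stats, fmt="json"))
```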

I also submitted two OAuth2-related patches to Rust crates:

In terms of documentation, I've spent a decent amount of time improving my Rust on Toolforge wiki page, including some updates that came after debugging with other Rust users on IRC. I think it's in a state that we can link to it from the official Toolforge docs.

I'm a little behind with this update as it took me a little longer to prepare and launch my project: diff-libraries. I'm planning to write a more detailed post on that later, but it's my most involved Rust project so far. The webserver is powered by Rocket and it uses diesel for the SQL backend. I'm using Tera templates, which feel like Jinja2 templates, but are missing some of the nice Flask integrations like url_for.

I've gotten a pretty good setup going with GitLab-CI now: rust-ci-pipeline (the name and setup are inspired by what Debian has). There are still a few problems with cargo-tarpaulin segfaulting every now and then but I haven't been able to debug it yet.

I published my first real library crate too: eventstreams (docs). It's a wrapper around Wikimedia's new recent changes feed. I think the fact that docs.rs automatically builds documentation for every single library on crates.io, with no extra action needed, is a real game changer. Even the auto-generated documentation is super useful, and it makes authors more likely to fill in the documentation knowing that someone will actually read it.

My goal for this past week was to learn async, and I kind of did: I ported subdown3 to be all async. I think I get the basics, but eh, over it for now. The fact that reqwest didn't let me incrementally migrate from its blocking mode to the async version was frustrating, because it meant I had to port the entire codebase over to async before I could even get any of it to run to verify I was heading in the right direction.

Code written/released:

Libraries used:

Concepts learned:

- async/await (beginner)

- std::thread

- build.rs

- visibility in libraries/modules (pub)

Next week:

- I want to have a better understanding of lifetimes, especially with regards to threads.

- @janriemer gave me some tips on mocking libraries that I still need to look into (thanks!).

I think I'm starting to understand why people like Rust so much. The tooling, especially rustup and cargo, is pretty fantastic. The fact that rustfmt (code auto-formatter), clippy (linter) and a test runner are all integrated through cargo is super convenient. I feel like Python used to have that with setuptools/setup.py but over time that's been lost.

This week I ported my Gerrit helper grr to Rust, and rewrote subdown3, a Reddit downloader tool that I originally wrote in Python nearly a decade ago. subdown3 has straightforward command-line options and primarily deals with URL parsing and hitting various APIs. grr is a convenience wrapper around git that just shells out.

I'm also hosting my Rust projects on GitLab, primarily to take advantage of its CI features (which I don't feel like setting up for git.legoktm.com). I've been using cargo-tarpaulin to generate coverage for tests, which has been simple. No extra configuration or anything, you just run it.

One thing I've been struggling with is figuring out how to mock functions. Because grr primarily shells out to git, integration testing isn't that useful, but testing what exactly we're shelling out to is more useful.
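One common way to test "what exactly we're shelling out to" is to build the command in a pure function and make the runner injectable. A minimal Python sketch of that idea follows. The function names are hypothetical and this is not grr's actual code, though `HEAD:refs/for/<branch>` is the standard Gerrit review ref.

```python
import subprocess


def push_command(branch):
    """Build the argv for pushing HEAD to Gerrit for review on `branch`."""
    return ["git", "push", "origin", f"HEAD:refs/for/{branch}"]


def push_for_review(branch, run=subprocess.run):
    """Run the push; `run` is injectable so tests can swap in a recorder."""
    return run(push_command(branch), check=True)


# In a test, record the command instead of executing git.
recorded = []
push_for_review("master", run=lambda argv, check: recorded.append(argv))
print(recorded)
```

The test then asserts on the recorded argv, so no git repository or network access is needed.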

Code written:

Libraries used:

Concepts learned:

- iterators

- std::process::Command

- publishing stuff on crates.io

Next week:

Originally posted on mastodon.technology.

Took me a while, but libkiwix9 is now waiting in Debian's NEW queue: https://ftp-master.debian.org/new/libkiwix_9.2.2+dfsg-1.html

Also, hopefully going to have another cool announcement in the future, still waiting on a PR to be merged :)