Encrypting a college newsroom with Signal

Originally published on freedom.press

Student journalists have it pretty rough. Most try to balance passing a full load of classes with running around to get the latest scoop, often without being paid.

Many programs are underfunded and, as we've previously discussed on this blog, lack any form of meaningful digital security training.

After interning at Freedom of the Press Foundation last summer, I was determined to beef up our digital security when I took over as executive editor of the Spartan Daily, San Jose State University's student paper.

Background

First, I devised our risk assessment, analyzing who would be most likely to target our newsroom, and the data we wanted to keep secure. I wasn't too worried about three-letter agencies spying on us. I was concerned with our school administration. We had recently alleged that SJSU had misused millions of dollars of athletic donations, so it was in their interest to discredit us. As I considered our risk assessment, I realized our university already had access to our university-provided email accounts, Google Drive folder, the lab computers we used and even the keys to our physical newsroom.

The one thing they didn't have access to was our chat platform. Originally we used a combination of GroupMe and SMS/MMS for all types of remote communication. Those messages could be as innocent as, "Hey my story is ready for you to edit," to an urgent heads up like, "Police activity by the library, can someone go?" or even private discussions of sources.

In 2014 Edward Snowden informed the world just how insecure SMS is: Your messages are unencrypted and your phone company or the government can easily access them. In one of his disclosures, the NSA described SMS messages as "A Goldmine to Exploit."

GroupMe is owned by Microsoft and uses the Microsoft Privacy Policy, which allows for data sharing for advertising and marketing purposes as well as to: "Comply with applicable law or respond to valid legal process, including from law enforcement or other government agencies." Plus anyone in your group can receive their messages over SMS, opening them up to all of those problems too.

Neither of those two options provides the strong sense of security and confidentiality that we as journalists would like to have.

Beyond sensitive editorial conversations, everything else in those chats was stupid jokes and memes. (What do you expect? We're college students.) But as Gawker taught us, bad jokes are no laughing matter for some newsrooms. Looking back at some of those old messages now, there are a few that make me cringe.

Evaluation

So, strengthening our newsroom's group chat/texting platform was my main priority. These are the specific requirements I settled on:

Required:

- Disappearing/expiring messages: Disappearing messages make our conversations ephemeral and can empower people to be more candid with what they say. I think it makes online conversations feel more like real life, where you can still remember what the person said, but it's not being recorded forever. When not everyone can physically be in the newsroom at the same time, it allows us to have a similar atmosphere.

- Free (as in cost): College newsrooms are often underfunded and generally have fewer support resources than their professional counterparts. Also, I didn't want to have to worry that if the university was paying for a service, they would have access to it and therefore be able to read our messages.

- Installable by everyone, with a straightforward UX: It's hard to overstate how important having a good user experience was for this – if it wasn't straightforward for people to use, I would end up being tech support for most people, something I didn't want to do. (It inevitably happened anyway.)

Nice to have:

- Desktop support: Sometimes it's nice to type on a keyboard instead of a tiny phone. More practically, it allows sending messages during class when your laptop is already on your desk, but taking your phone out would appear rude.

- End-to-end encrypted: This wasn't a hard requirement because I really wasn't concerned about a compromise from the service provider, as long as disappearing messages were truly implemented.

- Free and open source software: As a believer in the free software movement, I prefer to use free and open source software whenever possible, and like to recommend it to others.

The best match:

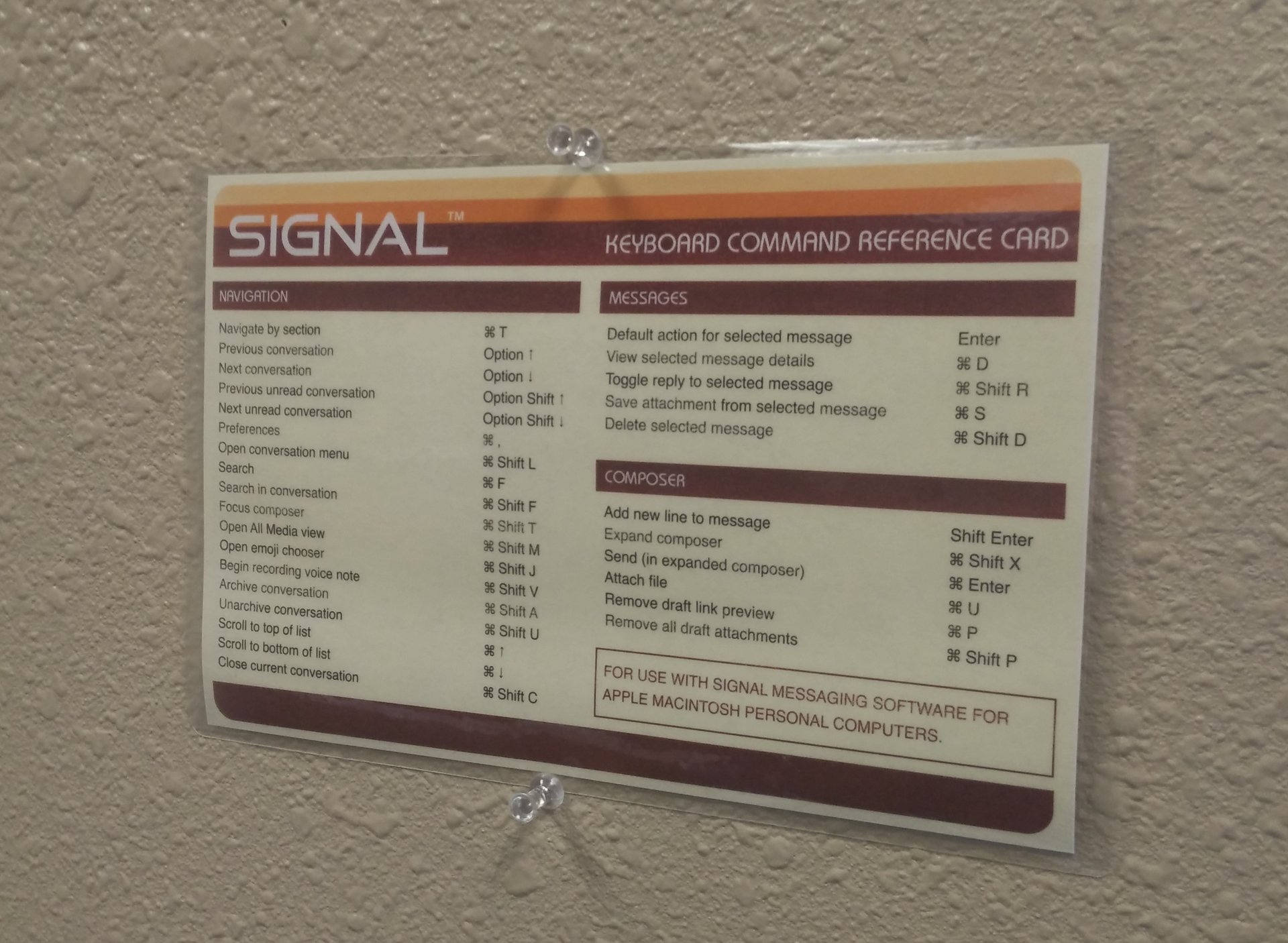

Signal fit really nicely into these categories, and I was already familiar with using and evangelizing it. After trying it out with a few editors over the summer the goal was set: Encrypt the newsroom's conversations with Signal.

Getting started

I asked everyone to install the Signal app and then send me a message so I could add them to a group chat with the entire newsroom, but in practice it wasn't that straightforward. Some people needed to leave class early and missed my instructions. One person kept getting an error from the App Store when they tried to install Signal (their phone didn't have enough free space). I would regularly check the list of people in the group chat against the class roster, but unfortunately one person slipped through the cracks for a few weeks.
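The roster check described above can be done by hand, but a small script makes it harder for someone to slip through. This is only a minimal sketch: it assumes both lists are typed or pasted in as plain names (Signal is not queried in any way), and the function names and sample names are hypothetical.

```python
def normalize(name: str) -> str:
    """Lowercase and collapse whitespace so 'Ana  Lopez ' matches 'ana lopez'."""
    return " ".join(name.split()).lower()


def missing_from_group(roster: list[str], group_members: list[str]) -> list[str]:
    """Return roster names (original spelling) with no match in the group chat."""
    in_group = {normalize(n) for n in group_members}
    return [n for n in roster if normalize(n) not in in_group]


# Hypothetical example data, not real staff names.
roster = ["Ana Lopez", "Ben Carter", "Chris  Diaz"]
group_members = ["ana lopez", "Chris Diaz"]

print(missing_from_group(roster, group_members))  # prints ['Ben Carter']
```

Matching on normalized names is a deliberate simplification; nicknames or differently spelled display names would still need a manual look.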

We had two main group chats, one for everyone and another just for editors. Both of those chats had disappearing messages set to retain messages for 1 week (the longest option), which worked out well. Today those two chat groups are basically empty, and while there might be some screenshots on people's phones, I feel confident that there are no significant copies lying around, waiting to be leaked.

Weaker points

Can't default everything to Signal: One of the weakest points of using Signal was how one-on-one chats worked. While I could ensure that I used Signal to talk to everyone, I had little visibility/influence in how others talked to each other. Phones tend to steer people into the default system apps (iMessage/SMS), so only using Signal requires a conscious behavior shift for each person. A common example is the canned message you can send saying you're busy when declining a call.

Don't want everything to disappear: The bigger problem was that even for those who used Signal, configuring disappearing messages wasn't practical. You'd have to enable it for every single chat, but sometimes you'd want to talk about personal, non-newspaper things with that person that you didn't want to disappear. I rarely turned it on for individual chats myself.

Gotchas

No automatic backups: As I learned, when people run into problems with an app, they sometimes will try a strategy of uninstalling the app and then reinstalling it. Unfortunately in the case of Signal, that means you've lost all your messages. This is a win for privacy, but very much not what users expect.

Requires an iOS/Android device: Not everyone has access to a smartphone. My plan was nearly foiled when I found out one of our reporters didn't have a smartphone, and couldn't download and use Signal (which wouldn't have been an issue on GroupMe). Luckily, they could use Signal on their old iPod Touch. Combined with campus-wide Wi-Fi, the solution was largely sufficient.

Lack of proper group management: You can't kick people out of group chats — they have to leave on their own. Thankfully, we did not have to kick anyone out, but we did come close to removing someone from our staff. We would have had to create a new group chat with everyone but that staffer and simply hope everyone else stopped using the old one.

Messages disappear too quickly: A week is rather short for messages to disappear, and if the conversation moves fast, people may forget that the older ones are gone. When setting up our "secret Santa," everyone posted in the group chat suggestions for what they liked. Not everyone jotted down what their person had said, so when they went to look back a week later… the messages were gone.

Conclusion

If your top priority is to ensure that all your communications disappear consistently, Signal is probably not the best tool to set an organization-wide retention policy. But for resource-strapped college newsrooms, it does a decent job. There are plenty of other holes in our digital security, but we're better off than before.

Two changes to Signal would have improved our experience: (1) a longer disappearing-message expiry, such as 1 month; and (2) more robust group management features, which we hear are coming soon.

For an application known for its encryption, Signal is an accessible tool for non-technical users to set up. Recently added features, like reactions and stickers, make it more approachable and bring it closer to feature parity with the less-secure messaging apps people are already comfortable with.

Despite reporting in a learning environment where digital security wasn't a priority, each student is now set up with, and has practical experience using, Signal. They now have a leg up on many of their peers, and can continue communicating with sources and other journalists in a secure manner.

Mauricio La Plante, who served as an editor alongside me at the Spartan Daily, said he found Signal very straightforward to use. Now a freelance reporter with San Jose Spotlight, he continues to use Signal during his reporting. "I would recommend it to any journalist I meet who doesn't already use it," he said.

If you're interested in getting started with Signal, check out our Signal for beginners guide.