Inside Scoop is a column about the operation of the Spartan Daily, San Jose State's student newspaper.

Each semester the Spartan Daily staff puts out a special issue that focuses on a specific topic or concept. It allows for an in-depth exploration of something that the San Jose State community is interested in. Previous topics have included race, gender and home. The special issue normally comes toward the end of the semester but we thought we'd do things a bit differently.

Rather than do one special issue - we planned on doing three. The first of them is what I want to break down today: Fighting 'fake news'. It won second place for best special issue in the 2020 Best of Show ACP San Francisco convention competition (here's what won first place), two steps up from our fourth-place finish for Heroic in 2019.

You can download a PDF copy of the issue to follow along.

The way we picked the special topics went differently this time around, and I think for the better. In the past, topics were chosen during the semester based on what was going on at the time and on a staff vote. Sometimes this worked, but I felt that if we spent more time selecting the topics, we could do a better job ensuring the content was cohesive and complete without significant duplication.

When we picked the topic over the summer, we thought we had the title too: Combatting 'fake news'. The word "combatting" is weird though, as it can also be spelled "combating". I definitely did not feel comfortable using a word in the title that people could (incorrectly) interpret as misspelled. Misspellings, or even the perception of one, destroy our credibility ("if you can't even get the spelling right, how can we trust you to get the facts right?"). So we switched the title to: Fighting 'fake news'.

The title takes a clear stance - "fake news" is bad and we need to fight it. There is an expectation that journalists will objectively cover their subjects, trying to stay as neutral as possible. But I think anything that threatens our mission of informing our audiences (restricting our First Amendment rights is a common example) is appropriate for a newspaper to take a stance on, even outside of the opinion/editorial pages.

The second problem with the title of the issue was whether to put "fake news" in quotes or not. We decided that upon first reference we would put it in quotes to indicate that we were referring to it as the term rather than literal fake news. Later references wouldn't use quotes because by then the reader should be able to understand what the meaning/intent is.

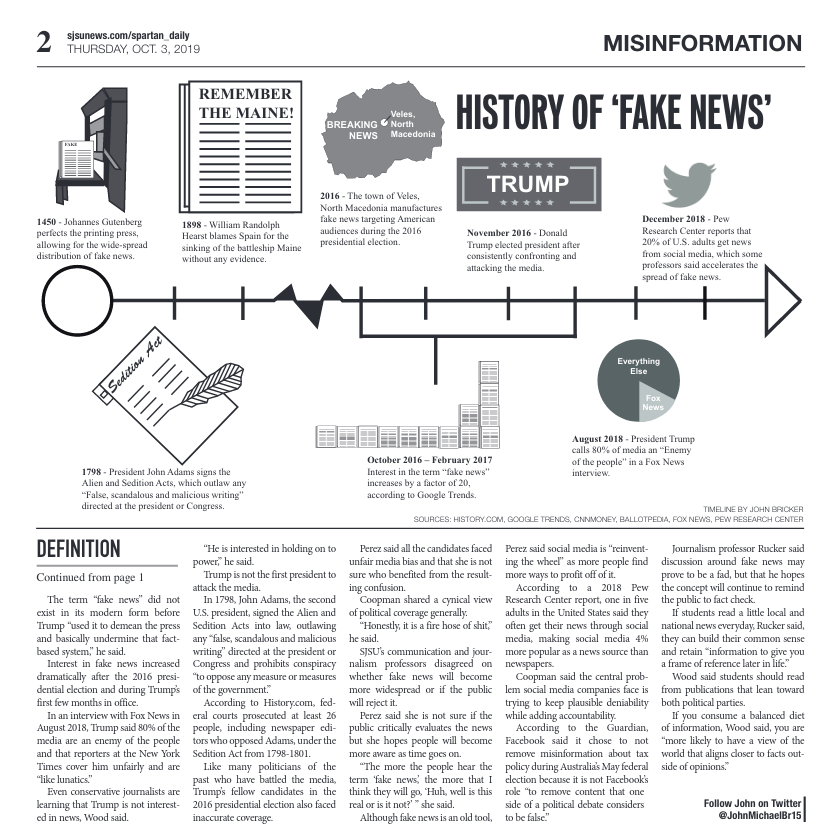

That leads us into the first story - what exactly does "fake news" mean?

I'm not sure how it happened, but John ended up writing the intro/lead story for two of the three special papers in addition to the lead story in our soccer season preview. And like usual, he did a pretty great job with balancing his own research with what SJSU professors (our experts) told him. Typically we have a blanket prohibition on interviewing journalism professors/students because it's "incestuous" (as said by Professor Craig) to be interviewing ourselves, but because journalism professors are subject-matter experts in this case, writers were given an exception this one time.

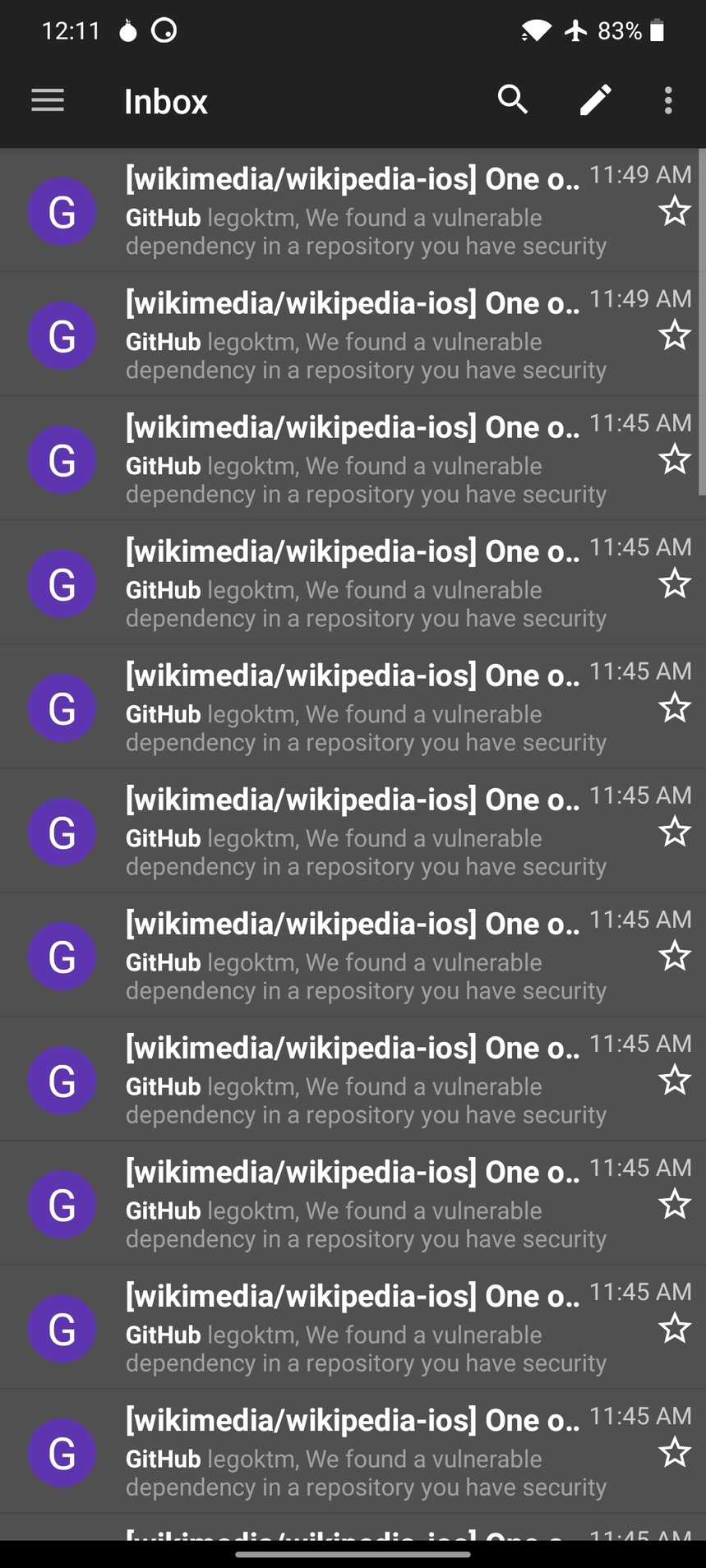

This was also the time we fully realized that John was designing his infographics in InDesign rather than Illustrator/Photoshop. I really like how the timeline visualizes all of the events covered in the story, starting with Gutenberg's invention of the printing press. We struggled a bit with how to represent two different timescales, as a significant number of events happened very recently. Eventually we settled on the zig-zag line that you see in graphs to indicate a shift.

My one regret is that the quote, "Honestly, it is a fire hose of shit," didn't make it into a pull quote.

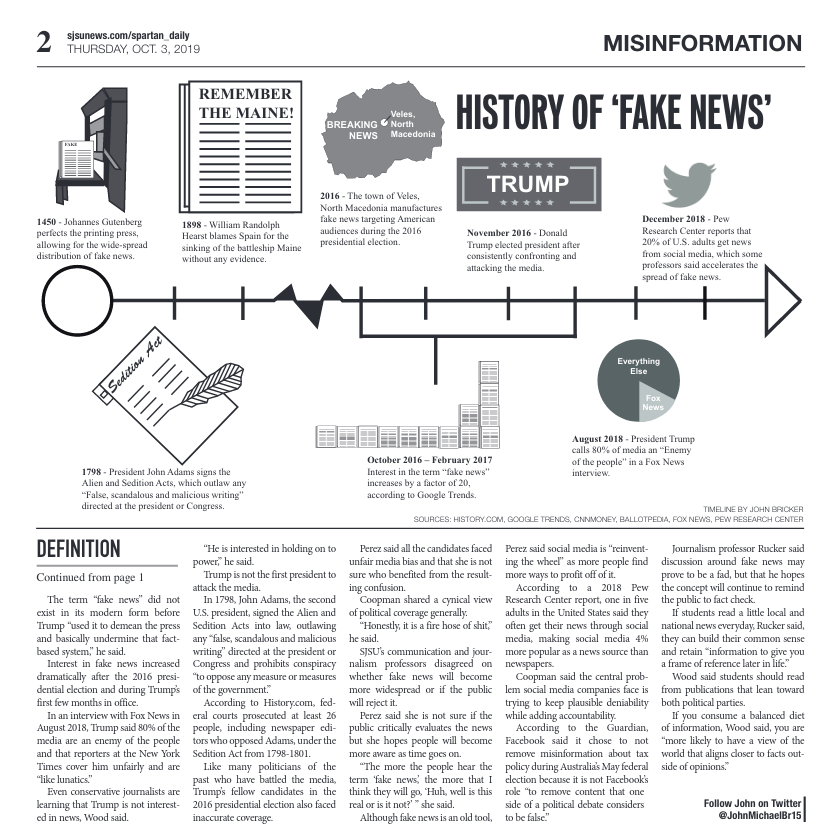

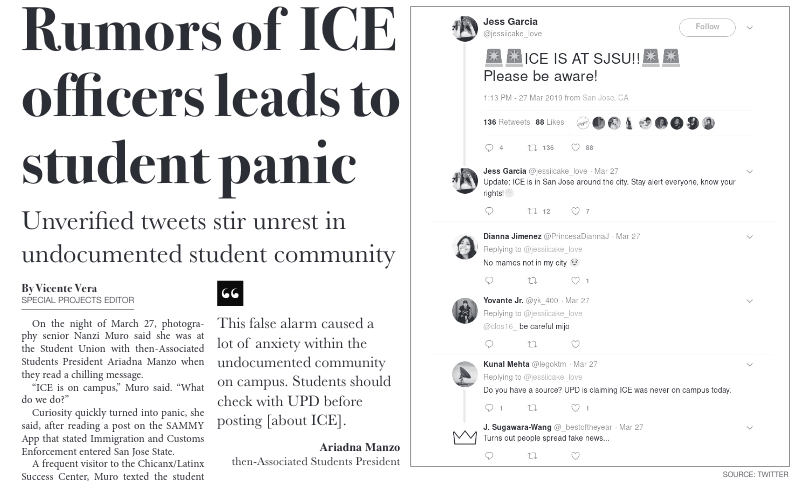

The next story about false ICE rumors spreading over Twitter was easily the most important story in this entire issue. As seen in the screenshot (or on Twitter itself), people falsely claimed on Twitter that ICE was on the SJSU campus, obviously scaring undocumented students. It's trivial to find other tweets that falsely claim this too.

There's no better case study for "fake news" than something concerning our own campus. Given that the Spartan Daily already covered the incident when the rumors first spread, Vicente's story retells the tale from a different angle: focusing on how the university fought the "fake news". This is the theme that all the remaining stories keep in mind - how do we as individuals and as a society fight "fake news". I do wish that we could have had an interview with someone who actually spread the rumors on social media though.

Echo chambers seem rather relevant to SJSU as most students lean progressive/liberal/left/etc. but there are definitely some people holding conservative/right/etc. views on campus. After defining a jargon-y term, Christian included insight from students on how they obtain their news, really priming the reader for the next section on Pages 4 & 5.

Melody's illustration was absolutely beautiful...and then we couldn't afford to run it in color. :( I also think the rest of the page ends up becoming a sea of gray - it would have benefited from a pull quote to break up the solid columns of text. That would have also forced us to shorten the story a bit and maybe make it slightly more concise.

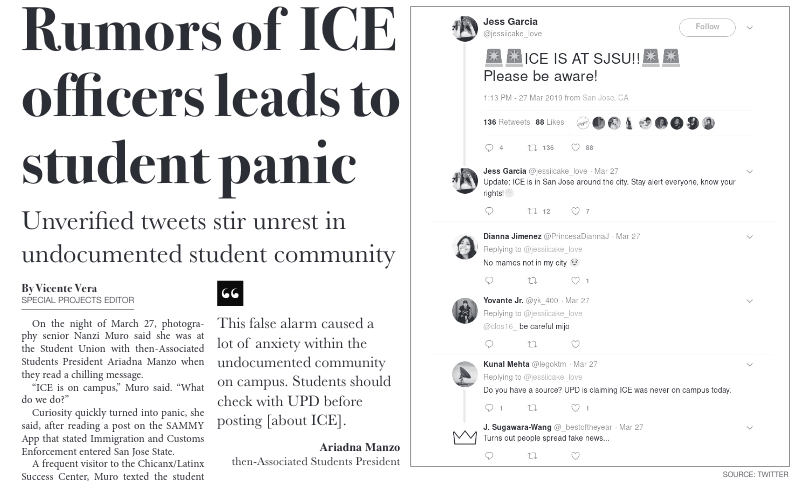

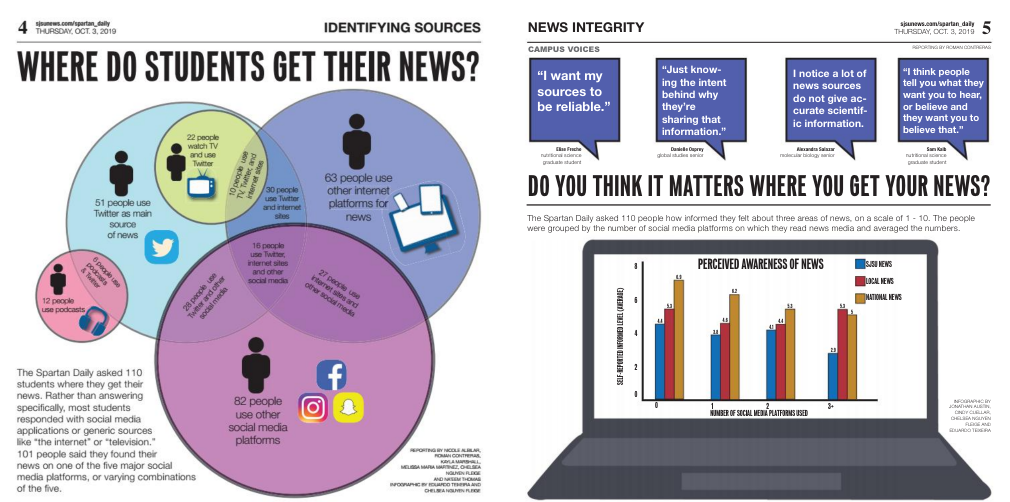

These two pages discussing where students get their news from are a beautiful mess that did not turn out the way any of us planned. The original idea was that we would survey students on where they get their news (e.g. CNN, NY Times, BuzzFeed News), and provide a ranking and analysis (e.g. AllSides media bias ratings) for each source. But that's not at all what happened. Most students returned the survey with the platforms (Twitter, Facebook, Instagram, etc.) they got their news from rather than the actual sources.

The resulting dataset was messy and overlapping, calling for one type of chart: a Venn diagram. Chelsea and Ed did a great job putting it together that night as people were still returning survey slips. Some really fantastic things can come out of being forced to work under pressure, but we should have had a better plan for this. We should have started the survey earlier and had a backup plan for what to do with that space if the data didn't come out the way we wanted.

The second half answered the natural follow-up question: does it actually matter? It was kind of a leading question, so unsurprisingly everyone gave answers favorable to the point we were trying to push. We also messed up the layout: the headline with the question should have been above the speech bubbles.

The main takeaway I had was that if you run a free-response survey, expect the responses to be all over the place. It also helps to test the questions on a few friends before giving the survey to everyone.

The idea was that our guide would be the "if you take one thing away from this issue, it should be this." I think the content did that, but the framing/location in the issue wasn't ideal even though it's a logical follow-up from the previous infographic content. The infographic is unsigned as if it's an editorial statement, but it wasn't something the entire board worked on; just 3-4 editors did. Also, putting the main takeaway on Page 6 probably isn't that great. I think it would have worked better on the back page (Page 8) with the editorial. We would have needed majority approval from the editorial board on the concept/text, but I think it would have been a good idea regardless.

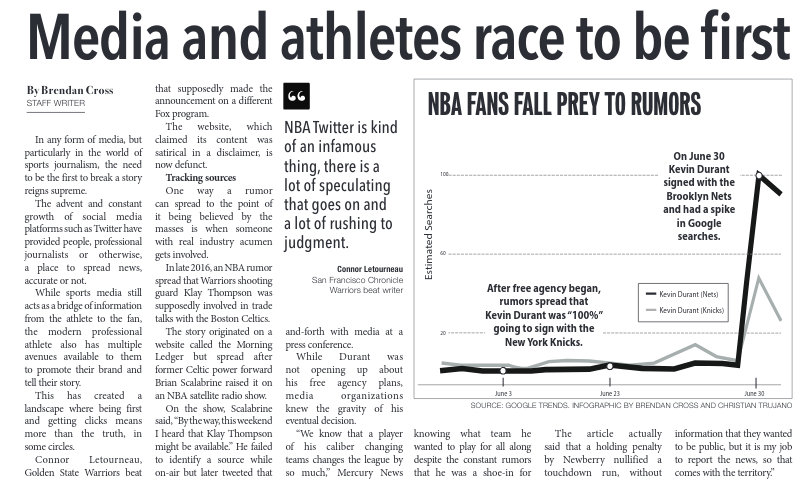

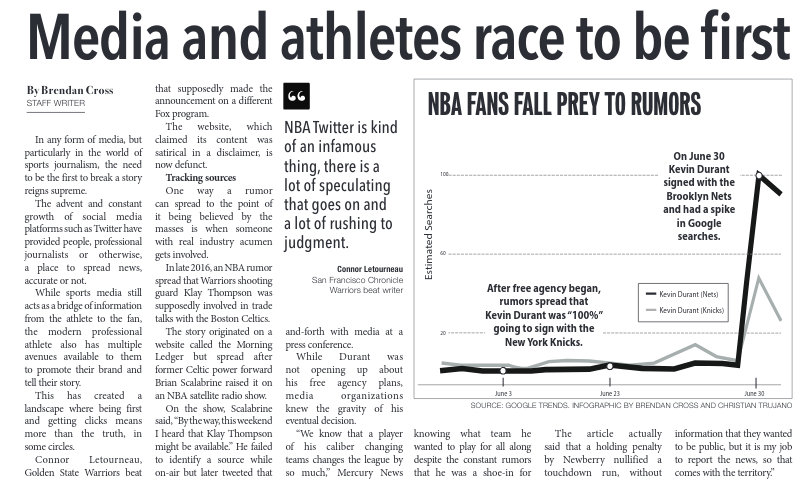

The evolution of this story was unexpected but great. Going into it, Brendan was going to reach out to coaches/players at SJSU as well as professional journalists to capture the story from both sides of reporting. We expected that it would be straightforward for him to get interviews at SJSU because we already have a good relationship with our athletics media relations team and a bit more difficult to get a hold of professional sports journalists ... but the exact opposite happened. CJ was able to put him in touch with multiple fantastic sources while we were struggling to get good quotes from SJSU coaches. Coincidentally we visited The Mercury News on Monday (the issue came out on Thursday) and while we took a tour around the building, a few of us hung back to interview two of their sports reporters.

Our biggest failing in this story was the lack of good artwork. The infographic was a last-minute thing because we needed some graphical element for the story, but at least to me it really looks like an afterthought. Had we moved the guide to another page, this story would have gotten an entire page and I think we could have done some kind of cool photo illustration with Durant and headlines.

Perfection is elusive. One of the hardest lessons for me to learn as executive editor has been that no matter how many checks or reviews we do, some things will just slip by everyone.

Somehow a faint gray border was accidentally set on the text box of Olivia's story, which pushed everything down a line so her story did not finish. It was fixed for the digital PDF copy, so I took a picture of the physical print copy to show it.

It's not productive for us to blame any specific person given the number of eyes that went over it and didn't notice (also blaming people in general is a terrible practice). But it's still frustrating enough that I don't really have any comments on the story itself. For a later special paper I had one of our editors go through all of the pages again before we sent them to the printer to double check stuff like this.

This story was not planned. I don't remember where we went wrong, but when we were laying out which story was going to go on each page, we ended up short one story in the opinion section. While normally I would have preferred a staff writer to fill in the gap, because we were very short on time Jonathan, the opinion editor, wrote the story himself. Plus he likes satire so it was a great fit.

I would also like everyone to know that there is a Wikipedia article titled 'No Way To Prevent This,' Says Only Nation Where This Regularly Happens about The Onion stories with the same name.

Before I get into discussing this page, I want to share an excerpt that explains my position on editorials from one of my favorite books, The Landry News.

(Maybe another time I'll write about how much I love editorials.)

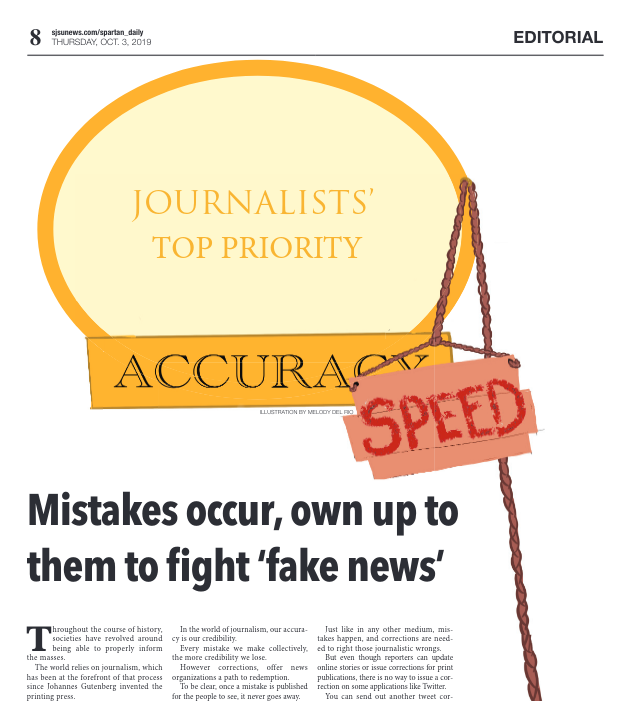

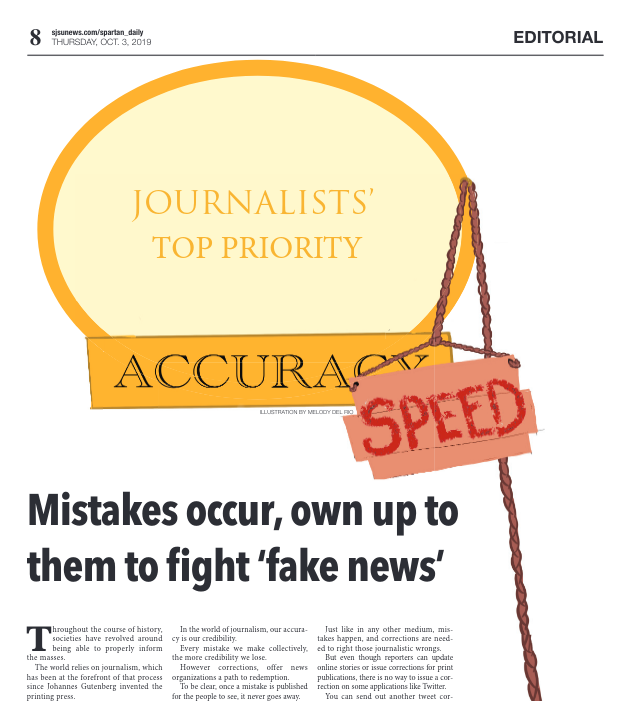

While most of the special issue addresses how consumers of news can do a better job avoiding "fake news", I think we tackle the hard issue that many journalists may not want to admit - the news media is also culpable in the rise of "fake news". As we explain how our correction policy works, I hope that came across as the heart of the Spartan Daily - as student journalists we do our best to get it right the first time around, but we will always publicly admit our mistakes and rectify them with the aim of doing a better job the next time.

For laying out the page, Melody really came in clutch and did a great job. We were expecting to have a half-page ad and then it fell through rather late in the day. Melody stepped up and had the attitude of "I get to draw something how big?" And finishing the page with the one correction we had to run that day just sealed the deal.

Overall, I do wonder how much this issue resonated with our primary audience, San Jose State students. I think we did well among one of our secondary audiences, SJSU professors, but that doesn't surprise me given this is a topic many of them are already interested in.

We tried to push the principle of doing things early and planning to give a better result and I think it mostly worked. Nearly all of the content finished going through the entire editing process by the weekend, which really helped Marci put together skeletons/templates of each page for editors to just paste in the final content. This allowed us to spend more time making minor tweaks to get the details right rather than having to just settle for the basics (of course, some stuff still slipped through - "founding founders" still gets to me).

One oddity of this paper was that there was only a single image in the entire issue (the teaser photo of Kevin Durant on the bottom right of Page 1). "Fake news" isn't really a photograph-able subject I suppose.

Because of the name of this issue, it's frequently referred to as "the fake news issue" (even I'm totally guilty of this), which is going to be a great way to be remembered in Spartan Daily history: "Yep, that was the semester they put out a fake news issue."

Thanks to Victoria for reviewing and editing this post before publication.